GCP Integrations

Requirements

The GCP integration requires a credentials file in JSON format containing the private key used to access Google Cloud Pub/Sub or a Google Cloud Storage bucket. It also uses the following settings:

- project_id: This tag indicates the Google Cloud project ID.

- subscription_name: This string specifies the name of the subscription to read from.

- credentials_file (Attach credentials.json): This setting specifies the path to the Google Cloud credentials file, the JSON key file used for JSON Web Token (JWT) authentication.

- bucket_name: This setting specifies the name of the Google Cloud Storage bucket to read from.
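As a rough illustration, the settings listed above could be checked for completeness before use. The sketch below is hypothetical and not part of the Zensor GCP module: the `REQUIRED_SETTINGS` table, the `missing_settings` helper, and the sample subscription name are assumptions made for the example, as is the idea that `bucket_name` is only needed for the gcp-bucket module.

```python
# Hypothetical helper, not part of the Zensor GCP module.
# Assumption: bucket_name is only required by the gcp-bucket module.
REQUIRED_SETTINGS = {
    "gcp-pubsub": ("project_id", "subscription_name", "credentials_file"),
    "gcp-bucket": ("bucket_name", "project_id", "subscription_name", "credentials_file"),
}


def missing_settings(module, settings):
    """Return the required setting names that are absent or empty."""
    return [name for name in REQUIRED_SETTINGS[module] if not settings.get(name)]


example = {
    "project_id": "zensor-gcloud-123456",
    "subscription_name": "zensor-subscription",  # illustrative value
    "credentials_file": "credentials.json",
}

print(missing_settings("gcp-pubsub", example))  # prints []
print(missing_settings("gcp-bucket", example))  # prints ['bucket_name']
```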

Architecture

- Configuring GCP credentials

- Configuring Google Cloud Pub/Sub

- Considerations for configuration

Configuring GCP credentials

For the Zensor GCP module to pull log data from Google Pub/Sub or Google Storage, it needs access credentials to connect to them. To provide these, it is recommended to create a service account with the Pub/Sub or Storage permissions and then create a key. Save this key as a JSON file, because the GCP module uses it as its authentication method.

Creating a service account

Within the Service Accounts section, create a new service account and add the following roles depending on which module you use: gcp-pubsub, gcp-bucket, or both.

For gcp-pubsub, add two roles with Pub/Sub permissions: Pub/Sub Publisher and Pub/Sub Subscriber.

For gcp-bucket, add the following role with Google Cloud Storage bucket permissions: Storage Legacy Bucket Writer.
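The role assignment described above can be summarized as a small lookup table. This is only a sketch: `MODULE_ROLES` and `roles_for` are hypothetical names, and the values are the standard IAM role identifiers that correspond to the role names shown in the console.

```python
# Hypothetical lookup table mapping each module to the IAM role IDs
# behind the role names mentioned in the text.
MODULE_ROLES = {
    "gcp-pubsub": ["roles/pubsub.publisher", "roles/pubsub.subscriber"],
    "gcp-bucket": ["roles/storage.legacyBucketWriter"],
}


def roles_for(modules):
    """Return the ordered, de-duplicated roles needed for the chosen modules."""
    roles = []
    for module in modules:
        for role in MODULE_ROLES[module]:
            if role not in roles:
                roles.append(role)
    return roles


print(roles_for(["gcp-pubsub", "gcp-bucket"]))
```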

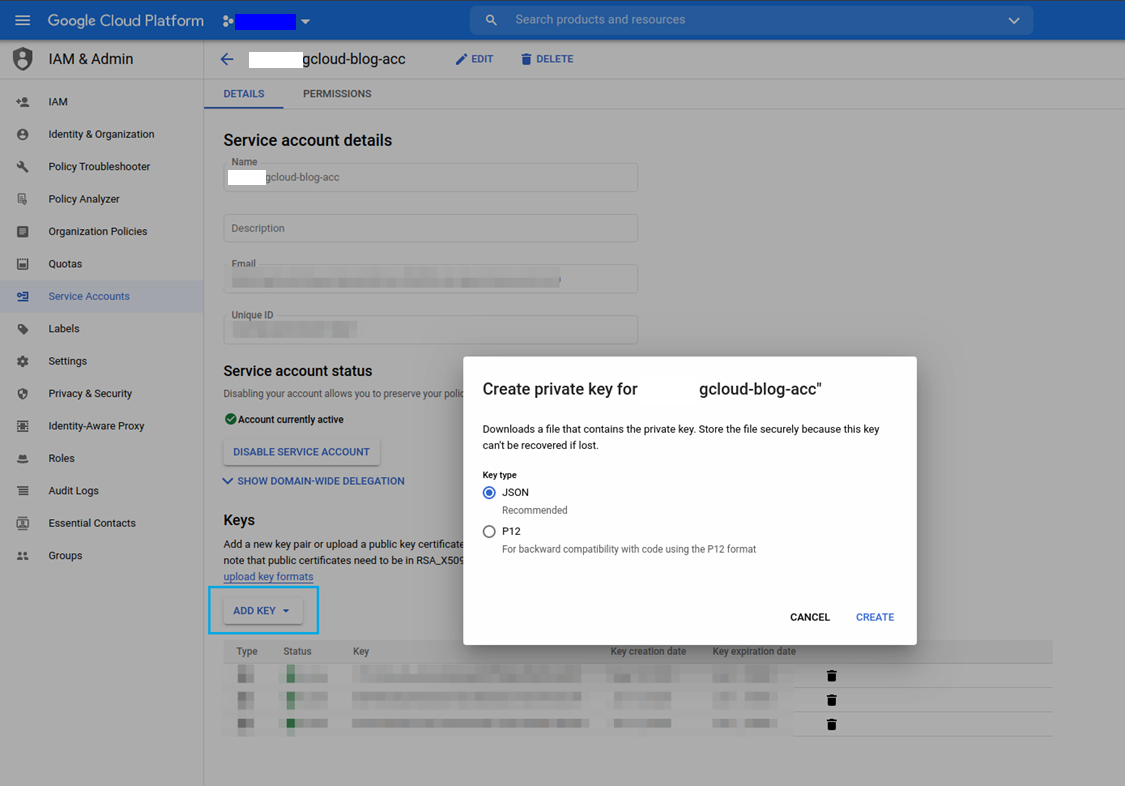

Creating a private key

After creating a service account, add a new key to it. To do this, click Create Key, select JSON, and click Create to complete the action. The downloaded key file looks similar to the following example:

    {
        "type": "service_account",
        "project_id": "zensor-gcloud-123456",
        "private_key_id": "1f7578bcd3e41b54febdac907f9dea7b5d1ce352",
        "private_key": "-----BEGIN PRIVATE KEY-----\n \n-----END PRIVATE KEY-----\n",
        "client_email": "zensor-mail@zensor-gcloud-123456.iam.gserviceaccount.com",
        "client_id": "102784232161964177687",
        "auth_uri": "https://accounts.google.com/o/oauth2/auth",
        "token_uri": "https://oauth2.googleapis.com/token",
        "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
        "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/zensor-gcloud-acc%40zensor-gcloud-123456.iam.gserviceaccount.com"
    }

Check the official Google Cloud Pub/Sub documentation to learn more about how to configure the GCP credentials JSON file.
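Before attaching the key file, it can help to confirm that it parses as JSON and carries the fields shown in the example key. The following is a minimal sketch using only the Python standard library; `EXPECTED_FIELDS`, `check_key`, and `check_key_file` are hypothetical names, and the field list is an assumption drawn from the example rather than an official schema.

```python
import json

# Assumption: these fields, taken from the example key, should always be present.
EXPECTED_FIELDS = (
    "type",
    "project_id",
    "private_key_id",
    "private_key",
    "client_email",
    "client_id",
    "token_uri",
)


def check_key(data):
    """Return a list of problems found in a parsed service account key."""
    problems = [f"missing field: {name}" for name in EXPECTED_FIELDS if not data.get(name)]
    if data.get("type") and data["type"] != "service_account":
        problems.append("type is not 'service_account'")
    return problems


def check_key_file(path):
    """Parse the JSON key file at `path` and check its contents."""
    with open(path, encoding="utf-8") as handle:
        return check_key(json.load(handle))
```

An empty list from `check_key_file("credentials.json")` means the basic structure looks right; it does not prove that the key is valid or that the service account has the required roles.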

Authentication options

Currently, the GCP integration only allows the credentials to be provided using an authentication file.

Using an authentication file

As explained before, the GCP integration requires a credentials file in JSON format containing the private key used to access Google Cloud Pub/Sub or a Google Cloud Storage bucket. Provide it together with the following settings.

For Google Cloud Pub/Sub

- project_id

- subscription_name

- credentials_file (Attach credentials.json)

For Google Cloud Storage bucket

- bucket_name

- project_id

- subscription_name

- credentials_file (Attach credentials.json)

Configuring Google Cloud Pub/Sub

Google Cloud Pub/Sub is a fully-managed real-time messaging service that allows you to send and receive messages between independent applications.

Zensor uses it to collect security events from Google Cloud instances without needing custom logic to avoid reprocessing events.

This section describes how to create a topic, a subscription, and a sink to fully configure Google Cloud Pub/Sub to work with Zensor.

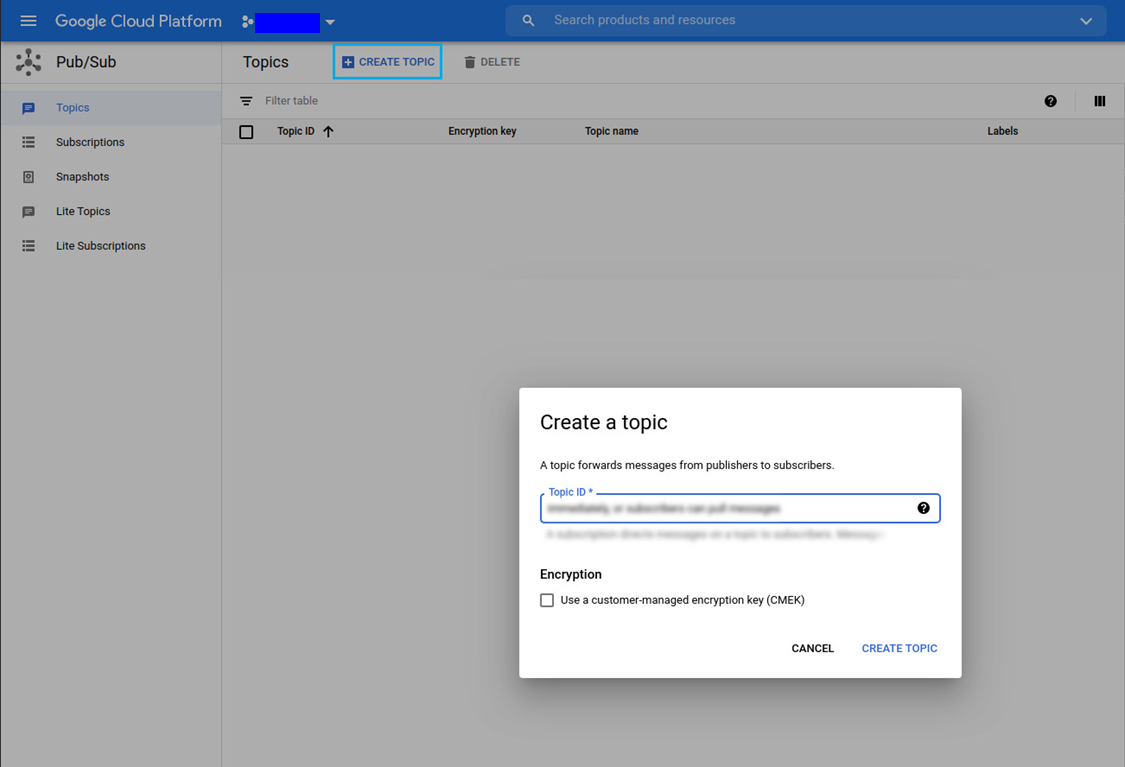

Create a topic

Every publishing application sends messages to topics. Zensor will retrieve the logs from this topic.

Get your credentials

If you do not have credentials yet, follow the steps in the Configuring GCP credentials section.

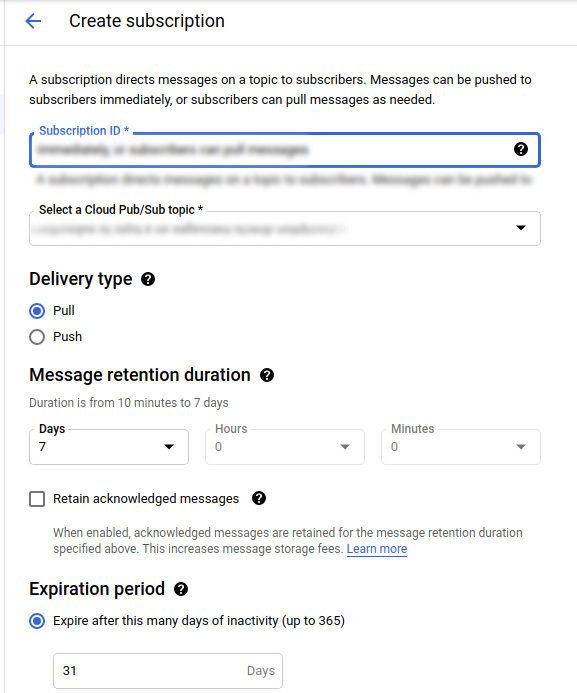

Create a subscription

Follow the steps below to fill in the Create subscription form:

- Fill in the Subscription ID

- Select a topic from Select a Cloud Pub/Sub topic

- Choose Pull in the Delivery type field

- Set the Message retention duration

- Set the Expiration period in days

At this point, the Pub/Sub environment is ready to manage the message flow between the publishing and subscribing applications.
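To check that the pull subscription delivers messages, a small client can read one batch from it. The sketch below assumes the `google-cloud-pubsub` Python package is installed and that the service account key is available as a local file. It is not how the Zensor GCP module is implemented, and `subscription_path` and `pull_once` are hypothetical helper names.

```python
def subscription_path(project_id, subscription_name):
    """Build the fully qualified subscription resource name."""
    return f"projects/{project_id}/subscriptions/{subscription_name}"


def pull_once(project_id, subscription_name, credentials_file, max_messages=10):
    """Pull one batch of messages, acknowledge them, and return their payloads."""
    # Imported lazily so subscription_path() works without the library installed.
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient.from_service_account_file(credentials_file)
    path = subscription_path(project_id, subscription_name)
    with subscriber:
        response = subscriber.pull(
            request={"subscription": path, "max_messages": max_messages}
        )
        payloads = [item.message.data.decode("utf-8") for item in response.received_messages]
        ack_ids = [item.ack_id for item in response.received_messages]
        if ack_ids:
            # Acknowledging tells Pub/Sub these messages were handled, so they
            # are normally not delivered to this subscription again.
            subscriber.acknowledge(request={"subscription": path, "ack_ids": ack_ids})
    return payloads
```

Because acknowledgement is tracked per subscription by Pub/Sub, the client does not need to keep its own record of which events it has already read.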

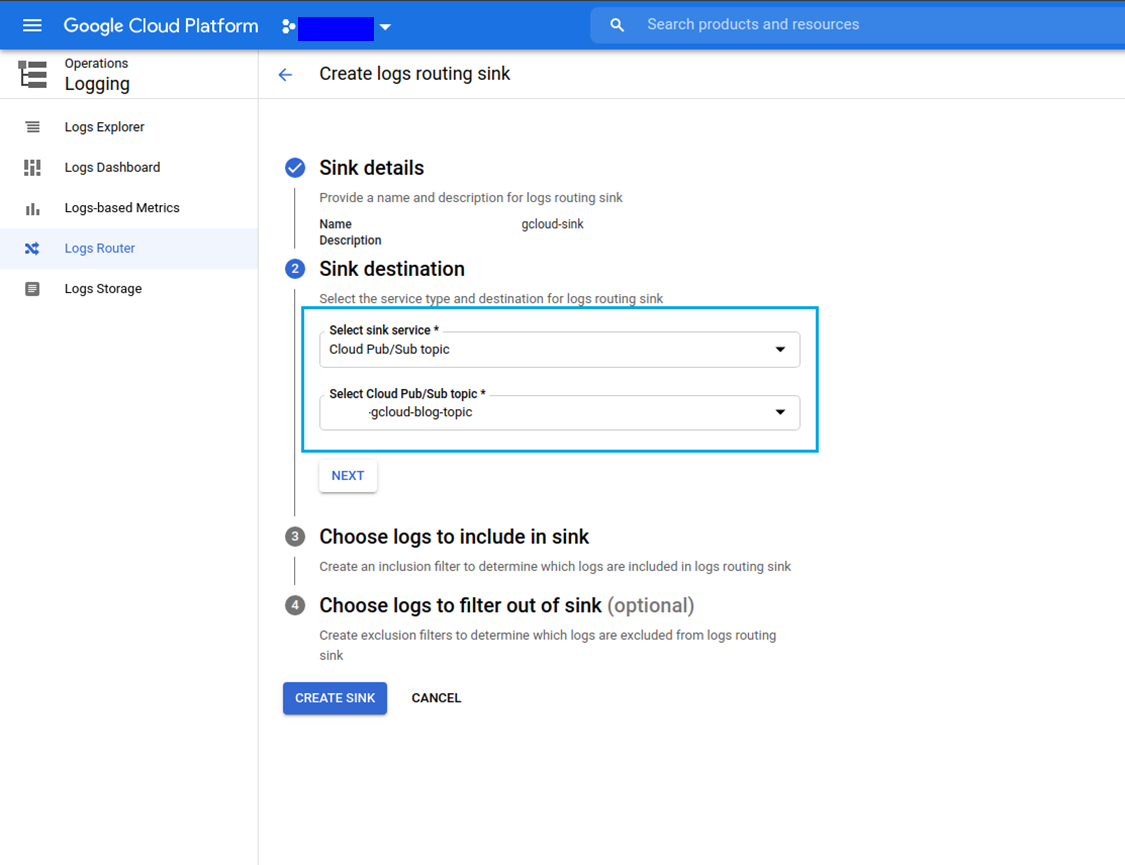

Export logs via sink

Log activities should appear under the Logs Router section. Cloud Audit logs can be published to a Cloud Pub/Sub topic through sinks. Create a sink and use the topic as its destination.

Follow the steps below to complete the Create logs routing sink form:

- Sink details: provide a name and description for the logs routing sink

- Sink destination: select the sink service type and destination

- Choose logs to include in sink: create an inclusion filter to determine which logs are included

- Choose logs to filter out of sink: create exclusion filters to determine which logs are excluded

- Click the CREATE SINK button.
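The values entered in the sink form follow fixed patterns, which the sketch below spells out. The helper names and the sample topic ID are hypothetical; the destination string uses the Pub/Sub destination format for sinks, and the filter is one common way to match Cloud Audit logs, assumed here as an example inclusion filter.

```python
# Example inclusion filter: matches any log whose name contains the
# Cloud Audit Logs prefix (":" is the substring operator in log filters).
AUDIT_LOG_FILTER = 'logName:"cloudaudit.googleapis.com"'


def sink_destination(project_id, topic_id):
    """Build the Pub/Sub destination string for a logs routing sink."""
    return f"pubsub.googleapis.com/projects/{project_id}/topics/{topic_id}"


def sink_definition(name, project_id, topic_id, inclusion_filter=AUDIT_LOG_FILTER):
    """Collect the sink form values in one dictionary for review."""
    return {
        "name": name,
        "destination": sink_destination(project_id, topic_id),
        "filter": inclusion_filter,
    }


# "zensor-sink" and "zensor-topic" are illustrative names.
print(sink_definition("zensor-sink", "zensor-gcloud-123456", "zensor-topic"))
```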

After you set everything up, you should see activity in the Log Viewer section. Refer to the official Google Cloud Pub/Sub documentation if you need help setting up a Cloud Pub/Sub topic and subscription.